
Jack Ox

Jack Ox writes on the analysis of music in “A Complex System for the Visualization of Music”. Ox notes that the approach taken can come down to whether a piece of music can be “reduced” to a single instrument, that is, whether it “is not dependent upon the timbre of different instruments”. In other words, different kinds of music call for different kinds of analysis, for example harmonic or phonetic. Ox expands on this: a harmonic analysis “would not” yield “meaningful information” for music which depends upon “carefully constructed timbre as its structure”. For “harmonically based music”, Ox developed the following system:

“For the first part I took a twelve step color wheel and superimposed it over a circle of fifths, which is the circular ordering of keys with closely related keys being next to each other and those not related directly across from each other. This ordering is the same as the color wheel. I made the minor keys 3 steps behind in an inner wheel, also in emulation of the circle of fifths. As the music modulates through keys, the same pattern occurs with the movement through the colors.”
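As a rough sketch of how this mapping could translate into code, the function below gives each key a hue based on its position on the circle of fifths. It assumes the twelve colour steps are evenly spaced hues (30° apart) with C at 0°. Ox's actual palette isn't given here, and `key_hue` and `HUE_STEP` are hypothetical names. I've read "minor keys 3 steps behind in an inner wheel" as meaning that each minor key takes the colour of its relative major, the way the inner ring of a circle-of-fifths diagram is usually drawn.

```python
# Circle of fifths, clockwise from C (sharps used for every spelling, for simplicity).
CIRCLE_OF_FIFTHS = ["C", "G", "D", "A", "E", "B", "F#", "C#", "G#", "D#", "A#", "F"]

# Assumption: twelve evenly spaced hues (30 degrees apart), with C at hue 0 (red).
# This is a hypothetical palette, not Ox's actual colour wheel.
HUE_STEP = 360 / len(CIRCLE_OF_FIFTHS)


def key_hue(tonic: str, minor: bool = False) -> float:
    """Return a hue in degrees for a key, following the circle-of-fifths layout."""
    position = CIRCLE_OF_FIFTHS.index(tonic)
    if minor:
        # Minor keys sit three steps behind on an inner wheel, so each minor key
        # lands on the position of its relative major (A minor -> C major).
        position = (position - 3) % len(CIRCLE_OF_FIFTHS)
    return position * HUE_STEP
```

For example, `key_hue("D")` gives `60.0`, and `key_hue("B", minor=True)` gives the same hue, because B minor is the relative minor of D major. Keys a fifth apart end up 30° apart on the wheel, and keys a tritone apart (such as C and F#) end up directly opposite each other (180°).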

Harmonic quality is the relative “dissonance or consonance of two or more notes playing at the same moment.” When it comes to silences, Ox asks how they should be visualised: “Should the silence be read as an empty instant of time, or is it in an equal balance of power with the ‘on’ notes?” I think that silence within music has to be treated like any other part. A piece of music should be analysed in its entirety, since that is what the creator has presented. For Ursonate, a 41-minute sound poem by Kurt Schwitters, Ox used “very bright, solid” colour, with deep reds for longer, prolonged parts and greenish yellow for short breaths. The phonetic analysis of Ursonate was done by mapping “how and where” vowels are made in the mouth.
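Ox doesn't say here how harmonic quality is measured, so the sketch below is only one possible reading. It scores a set of simultaneous notes (given as MIDI note numbers) by averaging a dissonance weight over every pair of notes. The weights in the hypothetical `INTERVAL_CLASS_DISSONANCE` table are my own illustrative assumption. They roughly rank seconds, sevenths and the tritone as harsher than thirds, sixths, fourths and fifths.

```python
from itertools import combinations

# Hypothetical weights, indexed by interval class (0 = unison/octave ... 6 = tritone).
# Higher means more dissonant. These values are illustrative, not taken from Ox's system.
INTERVAL_CLASS_DISSONANCE = {0: 0, 1: 6, 2: 4, 3: 2, 4: 1, 5: 1, 6: 5}


def interval_class(a: int, b: int) -> int:
    """Smallest distance in semitones between the pitch classes of two notes (0-6)."""
    d = abs(a - b) % 12
    return min(d, 12 - d)


def dissonance(midi_notes: list[int]) -> float:
    """Average dissonance weight over every pair of notes sounding at the same moment."""
    pairs = list(combinations(midi_notes, 2))
    if not pairs:
        return 0.0  # fewer than two notes: there is no interval to judge
    total = sum(INTERVAL_CLASS_DISSONANCE[interval_class(a, b)] for a, b in pairs)
    return total / len(pairs)
```

With these weights, a C major triad `[60, 64, 67]` scores about 1.33, while the semitone clash `[60, 61]` scores 6. A score like this could then drive a visual property, such as how sharp or muted a shape looks.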

“The list of colors for unrounded vowels comes from the warm side of the color wheel and rounded vowels are from the cool side. As the tongue moves down in the mouth to form different vowels, the color choice moves down the appropriate color list. Vowels formed in the front of the mouth, like ‘i’ and ‘e’, are a pure color. Vowels directly behind the teeth, like ‘I’, have a 10% complementary color component, the next step back in the mouth is 20% complementary, and so on until the back of the mouth, as in ‘o’ or ‘u’, which has 50% complementary color in the mixture.”
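These vowel rules are concrete enough to sketch. The `WARM` and `COOL` palettes below are hypothetical stand-ins for Ox's colour lists. The "complementary" colour is approximated by inverting each RGB channel, which is also an assumption, since a painter's colour-wheel complement can differ. Tongue height picks the entry in the list, and each step back from the front of the mouth mixes in another 10% of the complement, up to 50%.

```python
# Hypothetical palettes: Ox's actual colour lists are not given in the source.
# Each list runs from the highest tongue position (index 0) downwards.
WARM = [(255, 230, 0), (255, 160, 0), (255, 80, 0), (200, 0, 0)]   # unrounded vowels
COOL = [(0, 200, 120), (0, 150, 200), (0, 80, 220), (60, 0, 160)]  # rounded vowels


def complement(rgb: tuple[int, int, int]) -> tuple[int, int, int]:
    """Approximate complementary colour by inverting each RGB channel (an assumption)."""
    return tuple(255 - c for c in rgb)


def vowel_colour(rounded: bool, height: int, backness: int) -> tuple[int, int, int]:
    """
    rounded:  rounded vowels use the cool list, unrounded vowels the warm list.
    height:   0 = tongue highest; larger values move down the colour list.
    backness: 0 = front of the mouth (pure colour) ... 5 = back (50% complement).
    """
    if not 0 <= backness <= 5:
        raise ValueError("backness must be between 0 and 5")
    palette = COOL if rounded else WARM
    base = palette[height]
    mix = backness / 10  # 10% more complementary colour per step back
    comp = complement(base)
    return tuple(round((1 - mix) * b + mix * c) for b, c in zip(base, comp))
```

A front unrounded vowel such as "i" (`vowel_colour(False, 0, 0)`) keeps its pure palette colour. One quirk of RGB inversion is that a 50% mix always lands on mid-grey `(128, 128, 128)`, whatever the base colour. That may be a reason to compute the complement from hue instead if I try this.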

Ox, Jack, and David Britton. ‘The 21st Century Virtual Reality Color Organ’. IEEE MultiMedia 7 (1 July 2000): 6–9.

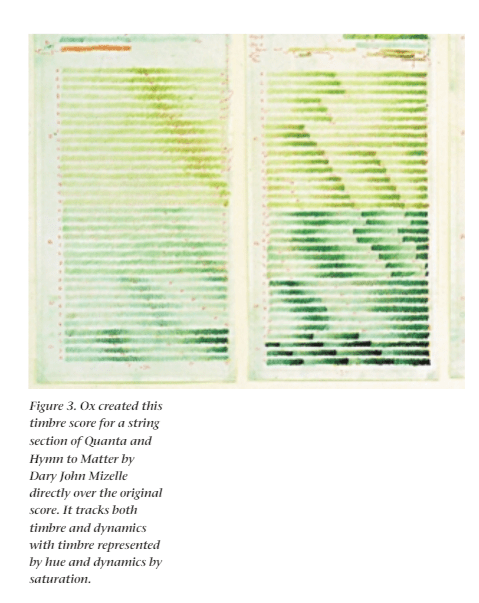

In the “21st Century Virtual Reality Color Organ”, as the music starts to play, a 3D image in black and white takes shape over a landscape. As the music continues, the landscape is populated with colour and shape according to the instrument’s family: depending on which instrument is playing, the shapes and colours predetermined for that instrument are displayed. The hue of the colour is “based on a timbre analysis of which instrument is being played”, and the saturation “reflects changing dynamics (loud and soft)”. Within the landscape, higher pitches are placed higher in space.
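The three mappings described for the Color Organ (timbre to hue, dynamics to saturation, pitch to height) can be sketched with Python's standard `colorsys` module. The `FAMILY_HUE` table is hypothetical, because the article only says that hue comes from a timbre analysis, not which hues are used. The sketch also assumes the instrument family and a normalised loudness have already been detected. That detection is the hard part, and it isn't shown here.

```python
import colorsys

# Hypothetical hue (in degrees) for each instrument family; not taken from Ox and Britton.
FAMILY_HUE = {"strings": 30, "woodwinds": 120, "brass": 50, "percussion": 270}


def organ_point(family: str, dynamic: float, midi_pitch: int):
    """
    family:     detected instrument family -> hue
    dynamic:    loudness normalised to 0.0 (soft) .. 1.0 (loud) -> saturation
    midi_pitch: MIDI note 0..127 -> height in the scene (higher pitch = higher up)
    Returns an (r, g, b) colour with channels in 0..1, and a height in 0..1.
    """
    hue = FAMILY_HUE[family] / 360.0
    saturation = min(max(dynamic, 0.0), 1.0)  # clamp out-of-range loudness values
    rgb = colorsys.hsv_to_rgb(hue, saturation, 1.0)
    height = midi_pitch / 127.0
    return rgb, height
```

In this sketch a very soft note is almost white and gains colour as it gets louder, while the instrument family alone decides which colour it moves towards. That keeps the timbre and dynamics mappings independent of each other.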

For my project, I hadn’t properly considered changing the hue of the colours based on the instruments being played, nor had I thought about approaching the visualisation as a landscape to be populated. I have consistently been thinking about the Winamp and Windows Movie Maker approach, and even though I might still go for something like this, it is always good to have a different viewpoint.

Phonetic analysis is also an interesting approach for sound files that might contain only the human voice. Ox’s use of deep block colours in this phonetic approach stood out too, since the sounds still had to be distinguishable from one another even though there were no musical instruments.

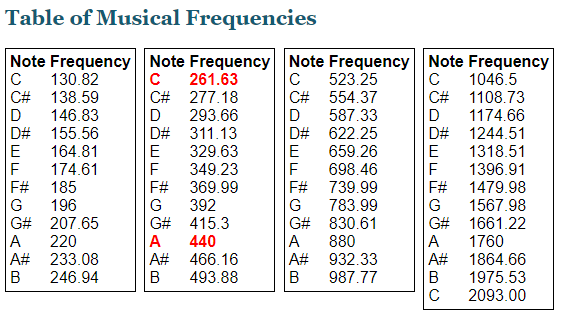

The almost scientific approach of combining the twelve-step colour wheel with the circle of fifths was interesting too, and might be a good basis on which to colour this project. With knowledge of the colour wheel and the circle of fifths, the key at any given moment could be portrayed. I don’t yet know how I would do this: without pre-processing, it might be hard to determine the chord progression without knowing beforehand what frequencies are in the sound file. One idea would be to store the frequencies of all the musical notes (at different pitches) in the program beforehand, and then match each frequency to a note in real time, using frequency bands that I have set according to something like…
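The matching idea can be sketched as a table of note frequencies stored ahead of time plus a nearest-match search. The sketch assumes twelve-tone equal temperament tuned to A4 = 440 Hz and covers the piano range. It also assumes a single dominant frequency has already been extracted from the audio (for example with an FFT), which it doesn't cover. `NOTE_TABLE` and `nearest_note` are hypothetical names. Comparing distances on a logarithmic scale means each note effectively owns a band reaching half a semitone either side of it.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Assumption: twelve-tone equal temperament with A4 (MIDI note 69) at 440 Hz.
A4 = 440.0

# Stored beforehand: every note from MIDI 21 (A0) to 108 (C8), i.e. the piano range.
NOTE_TABLE = [(m, A4 * 2 ** ((m - 69) / 12)) for m in range(21, 109)]


def nearest_note(freq_hz: float) -> str:
    """
    Match a detected frequency to the closest stored note.
    Distances are measured in octaves (log2 of the frequency ratio), so each
    note's band reaches half a semitone above and below it.
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    midi, _ = min(NOTE_TABLE, key=lambda entry: abs(math.log2(freq_hz / entry[1])))
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"
```

For example, `nearest_note(261.63)` returns `"C4"` (middle C), and a slightly sharp 452 Hz still matches `"A4"`. The pitch class of the matched note could then be looked up on a colour wheel. Identifying chords or keys would still need several notes collected over time.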

References

Ox, Jack. ‘A Complex System for the Visualization of Music’. In Unifying Themes in Complex Systems, edited by Ali A. Minai and Yaneer Bar-Yam, 111–17. Berlin, Heidelberg: Springer, 2006. https://doi.org/10.1007/978-3-540-35866-4_11.

Ox, Jack, and David Britton. ‘The 21st Century Virtual Reality Color Organ’. IEEE MultiMedia 7 (1 July 2000): 6–9.